Machine learning models teach themselves, but have limits

Researchers at Duke University have developed a machine learning technique called yoked learning. It pairs two machine learning models, one that gathers data and another that analyzes it, and the researchers believe the pairing can make machine learning models more effective. The technique could make it easier for researchers to use machine learning algorithms in the search for new therapeutics or other materials.

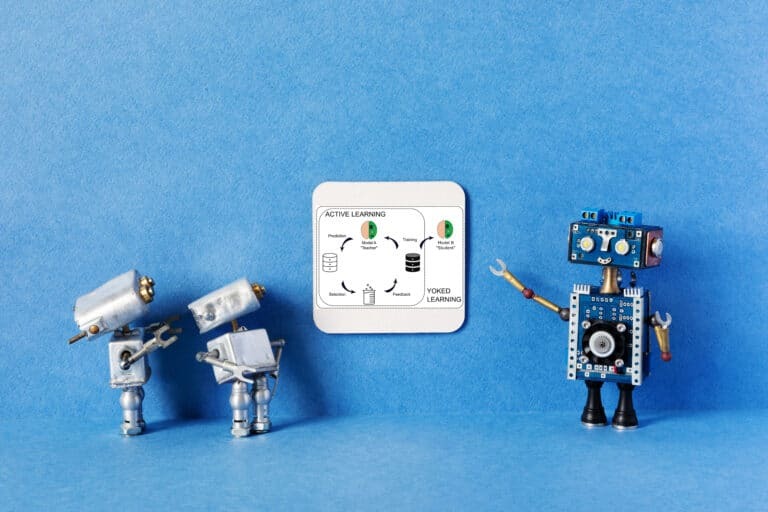

The proposed method, dubbed YoDeL, uses yoked learning to pair a deep neural network 'student' with an active machine learning algorithm that acts as the 'teacher' and guides data acquisition for the student. The approach is meant to overcome the limitations that active machine learning runs into when it is applied to more complex deep neural networks. Skeptical experts in the field warn, however, that YoDeL has limitations of its own, and they caution against overly optimistic projections.

Traditional machine learning models make predictions from a fixed dataset, a method that is often effective but comes with limitations. The models are bound by the datasets used to train them, which may lack key information and introduce bias that affects their accuracy. Active machine learning, in which a model helps choose the data it learns from, is highly effective for classical machine learning models, but applying it to more complex deep neural networks remains a challenge. Deep learning models require far more data and supercomputing power than is often available, which limits their accuracy and efficacy.

Deep neural networks can learn molecular characteristics without human intervention, which makes them useful for molecular machine learning. But these models need large datasets to train on, and incorporating active learning is difficult because the network must be retrained every time a new data point is gathered, which is practically infeasible.
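To make the teacher and student arrangement concrete, here is a minimal sketch of a yoked loop in plain Python. It is not the researchers' implementation: the class names, the `yoked_learning` function, the toy one-dimensional data, and the labeling rule in `oracle` are all assumptions made for illustration, and a small logistic regression stands in for the deep neural network. The point it shows is the division of labor: the cheap teacher is refit every round to decide which data point to label next, while the costly student is trained only once, on the data the teacher selected.

```python
import math


class CentroidTeacher:
    """Cheap classical model (hypothetical stand-in): one centroid per class."""

    def __init__(self):
        self.centroids = {}
        self.fit_calls = 0

    def fit(self, xs, ys):
        self.fit_calls += 1
        self.centroids = {}
        for label in set(ys):
            members = [x for x, y in zip(xs, ys) if y == label]
            self.centroids[label] = sum(members) / len(members)

    def uncertainty(self, x):
        # A small gap between the two centroid distances means the teacher
        # is unsure; negate the gap so that larger values mean "more unsure".
        d0 = abs(x - self.centroids[0])
        d1 = abs(x - self.centroids[1])
        return -abs(d0 - d1)


class LogisticStudent:
    """Stand-in for the costly deep model: logistic regression by gradient descent."""

    def __init__(self, epochs=2000, lr=0.5):
        self.epochs, self.lr = epochs, lr
        self.w, self.b = 0.0, 0.0
        self.fit_calls = 0

    def fit(self, xs, ys):
        self.fit_calls += 1
        n = len(xs)
        for _ in range(self.epochs):
            grad_w = grad_b = 0.0
            for x, y in zip(xs, ys):
                p = 1.0 / (1.0 + math.exp(-(self.w * x + self.b)))
                grad_w += (p - y) * x
                grad_b += p - y
            self.w -= self.lr * grad_w / n
            self.b -= self.lr * grad_b / n

    def predict(self, x):
        return 1 if self.w * x + self.b > 0 else 0


def yoked_learning(pool, oracle, teacher, student, seed_indices, budget):
    """Teacher picks which points to label; student trains once at the end."""
    labeled = {i: oracle(pool[i]) for i in seed_indices}
    for _ in range(budget):
        # Refit only the cheap teacher on everything labeled so far.
        teacher.fit([pool[i] for i in labeled], list(labeled.values()))
        candidates = [i for i in range(len(pool)) if i not in labeled]
        pick = max(candidates, key=lambda i: teacher.uncertainty(pool[i]))
        labeled[pick] = oracle(pool[pick])  # "run the experiment" for this point
    # The expensive student sees the teacher-selected data exactly once.
    student.fit([pool[i] for i in labeled], list(labeled.values()))
    return labeled


# Toy setup (assumed): 100 evenly spaced points, true class boundary at 0.6.
pool = [i / 99 for i in range(100)]
oracle = lambda x: 1 if x > 0.6 else 0

teacher, student = CentroidTeacher(), LogisticStudent()
# Seeds are the two extremes so the teacher starts with one example per class.
labeled = yoked_learning(pool, oracle, teacher, student, seed_indices=[0, 99], budget=10)

print("teacher fits:", teacher.fit_calls)
print("student fits:", student.fit_calls)
print("labeled points:", len(labeled))
```

In a direct deep active learning loop, the call to `student.fit` would sit inside the `for` loop and run once per acquired data point. Moving it outside the loop is what removes the repeated retraining cost, at the price of letting a different, simpler model decide which data the student gets to see.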

Despite the mixed reviews, YoDeL's speed makes it worth watching: it takes only a few minutes to complete, whereas deep active learning takes hours or even days. According to Daniel Reker, assistant professor of biomedical engineering, YoDeL's ability to harness the strengths of classical machine learning models to enhance the efficacy of deep neural networks makes it an exciting tool in a field that is always evolving. At the same time, experts call for a thorough examination of YoDeL to evaluate how effective the technique is in practical applications.
