LATEST

NASA supercomputer powers groundbreaking black...

Mon, May 6th 2024A new NASA visualization created on a...

Disheartening news from space: Webb Telescope...

Thu, May 2nd 2024According to the latest reports from NASA's James...

Enhancing water supply predictions through...

Wed, May 1st 2024Introduction: In a significant breakthrough, a...

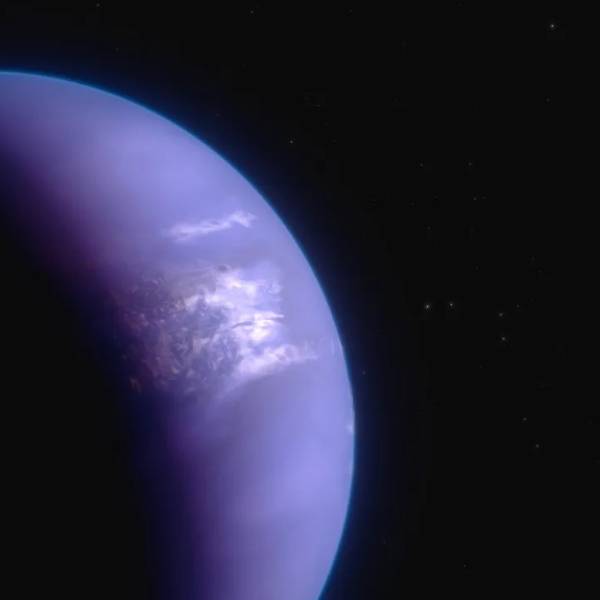

Unveiling the mysteries of distant worlds: NASA's...

Wed, May 1st 2024Introduction: In the vast expanse of the universe,...

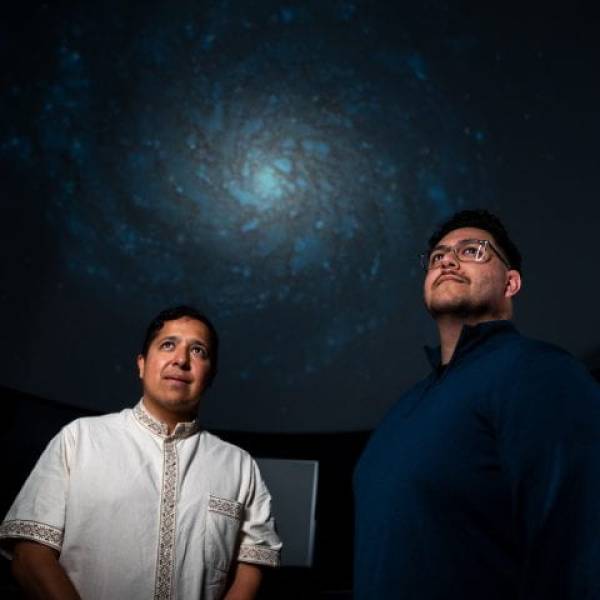

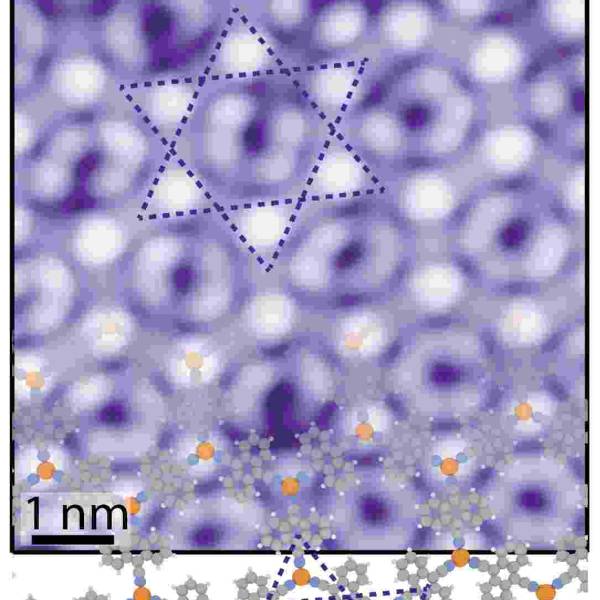

Shedding light on dark matter: Astronomers use...

Mon, Apr 29th 2024Introduction: The last century of scientific...

How to resolve AdBlock issue?

How to resolve AdBlock issue?