In the constantly evolving field of technology, reports of groundbreaking advances are not uncommon. The University of Minnesota Twin Cities recently announced a hardware device that it says could revolutionize artificial intelligence (AI) by sharply reducing energy consumption. The announcement has raised eyebrows and invited skepticism.

The research introduces a device that reportedly could cut the energy consumed by AI computing applications by a factor of at least 1,000. Such a bold assertion demands closer scrutiny, especially given the growing need for energy-efficient AI in today's digital landscape.

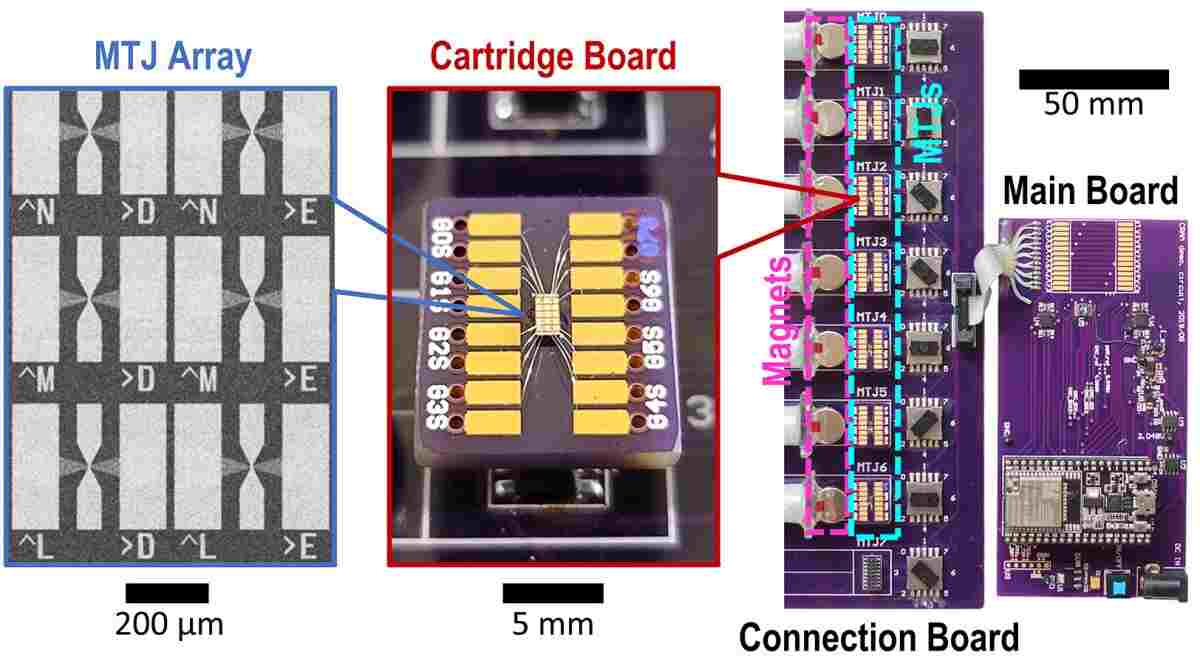

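The researchers present computational random-access memory (CRAM) as a novel approach in which data is processed entirely within the memory array. In conventional computers, data must shuttle back and forth between memory and the processor, and that transfer consumes a large share of the energy. CRAM's pitch is that keeping the data in place removes this cost. The concept is intriguing but also questionable: the idea that data never leaves the memory, thereby minimizing power and energy consumption, sounds almost too good to be true.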

The researchers point to projections from the International Energy Agency (IEA) forecasting a substantial increase in energy consumption for AI applications in the coming years. While the potential impact of reducing energy usage in AI by orders of magnitude is undoubtedly desirable from an environmental and economic standpoint, one cannot help but wonder about the feasibility and practicality of such claims.

Dr. Jian-Ping Wang, the senior author of the research paper, acknowledges the long journey and interdisciplinary collaboration that led to the development of this technology. However, the notion that a two-decade-old concept once deemed "crazy" has now materialized into a game-changing innovation raises questions about the reliability and objectivity of the claims being made.

Furthermore, the involvement of industry partners and the plans to work with semiconductor leaders on large-scale demonstrations may point to a commercial motive behind the enthusiasm for this new hardware. It is essential to evaluate the research critically and to consider whether it genuinely delivers the promised energy-efficiency gains for AI applications.

If proven effective, this technology could significantly advance AI capabilities while reducing environmental impact. Even so, a healthy dose of skepticism is warranted when evaluating the validity and practicality of such claims. As we navigate the complex landscape of supercomputing and emerging technologies, a cautious approach to new innovations is crucial to ensure that promises align with reality.

As the research progresses and the technology undergoes further scrutiny and validation, the question remains: is this state-of-the-art device truly a game-changer for energy-efficient artificial intelligence, or just another overhyped entry in the ever-growing list of technological breakthroughs?
