GOVERNMENT

How great is the influence, risk of social, political bots?

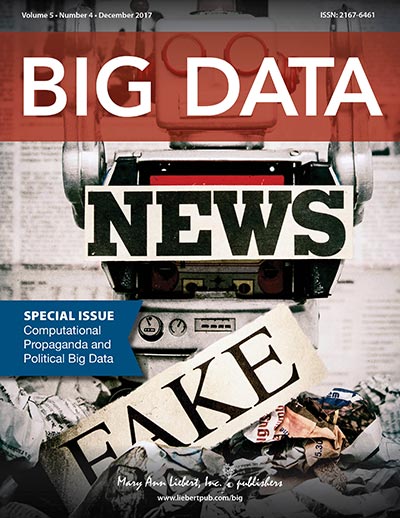

The role and risks of bots, such as automated Twitter accounts, in influencing public opinion and political elections continue to provoke intense international debate and controversy. An insightful new collection of articles focused on “Computational Propaganda and Political Big Data” examines how these bots work, approaches to better detect and control them, and how they may have impacted recent elections around the globe. The collection is published in a special issue of Big Data, a peer-reviewed journal from Mary Ann Liebert, Inc., publishers, and is available free on the Big Data website.

Guest Editors Philip N. Howard, PhD, and Gillian Bolsover, DPhil, from the University of Oxford, U.K., have compiled a compelling series of articles that provide a broad range of perspectives and examples on the use of bots in social and online media to achieve massive dissemination of propaganda and political messages.

In the article entitled "Social Bots: Human-Like by Means of Human Control?" coauthors Christian Grimme, Mike Preuss, Lena Adam, and Heike Trautmann, University of Münster, Germany, offer a clear definition of a bot and a description of how one works, using Twitter as an example. The authors examine the current technical limitations of bots and how the increasing integration of humans and bots can expand their capabilities and control, leading to more meaningful interactions with other humans. This hybridization of humans and bots requires more sophisticated approaches for identifying political propaganda distributed with social bots.

The article "Detecting Bots on Russian Political Twitter," coauthored by Denis Stukal, Sergey Sanovich, Richard Bonneau, and Joshua Tucker from New York University, presents a novel method for detecting bots on Twitter. The authors demonstrate the use of this method, which is based on a set of classifiers, to study bot activity during an important period in Russian politics. They found that on most days, more than half of the tweets posted by accounts tweeting about Russian politics were produced by bots. They also present evidence that a main activity of these bots was spreading news stories and promoting certain media outlets.

Fabian Schäfer, Stefan Evert, and Philipp Heinrich, Friedrich-Alexander-Universität Erlangen-Nürnberg, Germany, evaluated more than 540,000 tweets from before and after Japan's 2014 general election, and present their results on the identification and behavioral analysis of social bots in the article entitled "Japan's 2014 General Election: Political Bots, Right-Wing Internet Activism, and Prime Minister Shinzō Abe’s Hidden Nationalist Agenda." The researchers focused on the repeated tweeting of nearly identical messages and present both a case study demonstrating multiple patterns of bot activity and potential methods to allow for automated identification of these patterns. They also provide insights into the purposes behind the use of social and political bots.
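To give a rough sense of what flagging "nearly identical" tweets could involve, here is a minimal Python sketch. It is not the method used in the article: the function names, the normalization rules, and the 0.9 similarity threshold are all assumptions chosen for illustration, and the greedy grouping is a simplification that would not scale to hundreds of thousands of tweets.

```python
import re
from difflib import SequenceMatcher


def normalize(text):
    """Lowercase the text and drop URLs, @mentions, and extra whitespace (assumed rules)."""
    text = re.sub(r"https?://\S+|@\w+", "", text.lower())
    return " ".join(text.split())


def near_duplicate_groups(tweets, threshold=0.9):
    """Greedily group tweets whose normalized text is at least `threshold`
    similar to the first tweet of an existing group.

    Returns lists of indices into `tweets`; groups with one member are dropped.
    The threshold is an illustrative assumption, not a value from the study.
    """
    groups = []
    for i, tweet in enumerate(tweets):
        norm = normalize(tweet)
        for group in groups:
            representative = normalize(tweets[group[0]])
            if SequenceMatcher(None, norm, representative).ratio() >= threshold:
                group.append(i)
                break
        else:
            # No sufficiently similar group found: start a new one.
            groups.append([i])
    return [g for g in groups if len(g) > 1]


sample = [
    "Vote for change today! http://a.example/1",
    "vote for change today!  http://b.example/2",
    "Nice weather in Tokyo",
]
duplicate_groups = near_duplicate_groups(sample)
```

In this toy sample the first two tweets differ only in capitalization, spacing, and link, so they end up in the same group, while the third stands alone. Accounts that repeatedly appear in such groups would be candidates for closer inspection, not confirmed bots.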

“Big Data is proud to present the first collection of academic articles on a subject that is front and center these days,” says Big Data Editor-in-Chief Vasant Dhar, Professor at the Stern School of Business and the Center for Data Science at New York University. “While social media platforms have created a wonderful social space for sharing and publishing information, it is becoming clear that they also pose significant risks to societies, especially open democratic ones such as ours, for use as weapons by malicious actors. We need to be mindful of such risks and take action to mitigate them. One of the roles of this special issue is to present evidence and scientific analysis of computational propaganda on social media platforms. I’m very pleased with the set of articles in this special issue.”