SCIENCE

Breakthrough Fusion Simulations Shed Light on Plasma Confinement

Leadership-class computing helps develop commercially viable, clean energy

A research team led by William Tang of the Department of Energy’s (DOE’s) Princeton Plasma Physics Laboratory (PPPL) is developing a clearer picture of plasma confinement properties in an experimental device that will pave the way to future commercial fusion power plants.

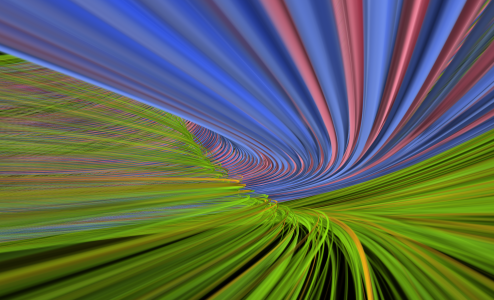

A global particle-in-cell simulation uses Weixing Wang’s GTS code to show core turbulence in a tokamak. Image courtesy Stephane Ethier, PPPL

Tang, who is also a professor at Princeton University, focuses on advanced simulation capabilities relevant to ITER, a multibillion-dollar international experimental device being built in France by a partnership of seven governments that together represent more than half of the world’s population.

If ITER can sustain effective fusion reactions, in which the fusion energy produced exceeds the input energy by more than an order of magnitude, humanity may well be on its way to a clean, safe, and limitless source of energy. Over the past 3 years, using resources of the Argonne Leadership Computing Facility (ALCF) and the Oak Ridge Leadership Computing Facility (OLCF), Tang’s team has steadily improved tools essential for computationally solving fusion problems.

Fusion of light nuclei powers the stars and accounts for the bulk of the steady energy release in the universe; a reactor such as ITER will fuse the hydrogen isotopes deuterium and tritium. To build the scientific foundations needed to develop fusion as a sustainable energy supply, a key step is the timely development of integrated, high-fidelity simulations with experimentally validated predictive capability for magnetically confined fusion plasmas. Magnetic confinement is widely regarded as the most efficient approach to holding a plasma under the conditions necessary to sustain a fusion reaction.

An associated challenge is understanding, predicting, and controlling instabilities caused by microturbulence in such systems. By significantly increasing the rate at which heat, particles, and momentum are transported across the confining magnetic field in a tokamak such as ITER, microturbulence can severely limit the energy confinement time and therefore a machine’s performance and economic viability. Understanding, and possibly controlling, the balance between these energy losses and the self-heating rate of the fusion reaction is essential to ensuring that future fusion power plants are practical.
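The link between transport losses and confinement time can be made concrete with the standard textbook definition of the energy confinement time. This is a generic energy balance, not a formula taken from the team’s codes; the symbols W (stored plasma energy), P_loss (rate of energy loss), P_α (fusion self-heating), and P_ext (external heating) are introduced here for illustration.

$$
\tau_E = \frac{W}{P_{\text{loss}}}, \qquad
P_{\alpha} + P_{\text{ext}} = P_{\text{loss}} = \frac{W}{\tau_E} \quad \text{(steady state)}
$$

If microturbulence doubles the transport losses at a fixed stored energy W, then τ_E is halved, and the combined self-heating and external heating must double to keep the plasma in steady state.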

“Since you’re never going to get rid of all the thermodynamic ‘free energy’ in a hot, magnetically confined plasma,” Tang said, “the losses generated by the persistent presence of plasma microturbulence feeding off of this free energy will ultimately determine the size and cost of a fusion reactor.”

Tang’s team includes Mark Adams of Columbia University, Bruce Scott of the Max-Planck-Institut für Plasmaphysik, Scott Klasky of Oak Ridge National Laboratory (ORNL), and Stephane Ethier and Weixing Wang of PPPL. DOE’s Office of Fusion Energy Sciences and the National Science Foundation’s G8 Exascale Project fund the research.

Tang and his colleagues are working to understand how to control and mitigate microturbulence to help ensure an economically feasible fusion energy system. Their investigations focus on two codes, GTC-P and GTS, both global particle-in-cell kinetic codes that model the motion of representative charged particles and the interactions of those particles with the surrounding electromagnetic field.
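To make the particle-in-cell idea concrete, here is a minimal sketch of one PIC cycle in a drastically simplified setting: a one-dimensional, periodic, electrostatic plasma in normalized units. GTC-P and GTS work in toroidal geometry with far more elaborate physics, so none of the function names or choices here (linear cloud-in-cell weighting, a leapfrog-style push, a fixed uniform ion background) should be read as their actual implementation.

```python
"""Minimal 1D electrostatic particle-in-cell (PIC) cycle, illustrative only.

Assumptions (not taken from GTC-P or GTS): normalized units with eps0 = 1,
a periodic domain, electron macro-particles with charge-to-mass ratio -1
moving over a fixed uniform ion background, linear (cloud-in-cell) weighting,
and a leapfrog-style push (velocities taken to live at half time steps).
"""
import math


def deposit(xs, n_cells, dx, q_particle):
    """Scatter particle charge to grid nodes with linear weights."""
    rho = [0.0] * n_cells
    for x in xs:
        s = x / dx
        j = int(math.floor(s))
        f = s - j                       # fraction of the way from node j to j+1
        rho[j % n_cells] += q_particle * (1.0 - f) / dx
        rho[(j + 1) % n_cells] += q_particle * f / dx
    return rho


def solve_field(rho, dx):
    """Integrate Gauss's law dE/dx = rho on a periodic grid (trapezoid rule)."""
    n = len(rho)
    e = [0.0] * n
    for j in range(1, n):
        e[j] = e[j - 1] + 0.5 * dx * (rho[j - 1] + rho[j])
    mean = sum(e) / n
    return [v - mean for v in e]        # periodic: no net applied field


def gather(x, e, dx):
    """Interpolate the grid field back to a particle with the same weights."""
    n = len(e)
    s = x / dx
    j = int(math.floor(s))
    f = s - j
    return (1.0 - f) * e[j % n] + f * e[(j + 1) % n]


def pic_step(xs, vs, n_cells, length, dt, q_over_m=-1.0):
    """One PIC cycle: deposit charge, solve for the field, gather, push."""
    dx = length / n_cells
    q_particle = -length / len(xs)      # electrons with mean charge density -1
    rho_e = deposit(xs, n_cells, dx, q_particle)
    rho = [r + 1.0 for r in rho_e]      # add the neutralizing ion background
    e = solve_field(rho, dx)
    new_xs, new_vs = [], []
    for x, v in zip(xs, vs):
        v_new = v + q_over_m * gather(x, e, dx) * dt
        new_vs.append(v_new)
        new_xs.append((x + v_new * dt) % length)  # wrap around periodic domain
    return new_xs, new_vs, rho, e
```

Repeating `pic_step` advances the particles in time. With evenly spaced electrons the deposited charge exactly cancels the ion background, so the field vanishes and nothing accelerates; displacing some particles produces a restoring field.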

This research aligns with the 20-year DOE priority to support ITER for developing fusion energy. The associated effort requires codes that can scale to huge numbers of processors and thereby make efficient use of powerful supercomputers. Tang’s experience working with some of the world’s most powerful supercomputers is likely to serve him well in his new role as the US principal investigator for a new international G8 Exascale Project in fusion energy. This high-performance computing (HPC) collaboration involves the United States (Princeton University, with hardware access at Argonne National Laboratory), the European Union (the United Kingdom, France, and Germany, with hardware access at the Juelich Supercomputing Centre), Japan, and Russia. It will explore how application codes can be deployed effectively as platforms move toward the exascale (supercomputers capable of a million trillion, or 10^18, calculations per second).

Scaling to new heights

Through the Innovative and Novel Computational Impact on Theory and Experiment (INCITE) program, Tang, Ethier, Wang, and other colleagues received leadership-class computing allocations of 8 million processor hours on the OLCF’s Jaguar supercomputer and 2 million on the ALCF’s Intrepid supercomputer in 2008, 30 million hours at the OLCF and 6 million at the ALCF in 2009, and more than 45 million at the OLCF and 12 million at the ALCF in 2010. These allocations have allowed the team to improve its algorithms. Its scaling efforts helped the researchers make the most of HPC systems and culminated in a demonstration that the GTC-P code scales efficiently to the full 131,072 cores of Intrepid.

Overall, this research has produced HPC capabilities for gaining insight into microturbulence, moving researchers closer to answering the key question of how turbulent transport and associated confinement characteristics differ in present-generation laboratory plasmas versus ITER-scale burning plasmas, which are at least three times larger. Continuing to develop their codes, the researchers incorporated a radial domain decomposition capability in a project led by coinvestigator Adams. This effort made it possible to simulate, with unprecedented efficiency, how turbulent transport scales from relatively small present-generation experiments (in which confinement is observed to degrade with increasing device size) to large ITER-scale plasmas. This capability is in turn enabling scientists to gain new insights into the physics behind the expected favorable trend whereby plasma confinement will cease to degrade as plasma size increases.
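The article does not describe how GTC-P’s radial domain decomposition works internally. As a hedged illustration of the general idea only, the sketch below splits the radial extent of an annular cross-section into contiguous shells, one per process, and assigns each particle to the process whose shell contains its radius. The equal-area rule, the function names (`radial_boundaries`, `owner`, `shell_area`), and the parameters are hypothetical choices for this example; the real code’s partitioning and load balancing may differ.

```python
"""Hypothetical radial domain decomposition of an annular cross-section.

Illustrative assumptions (not GTC-P's actual scheme): the radial range
[r_in, r_out] is split into contiguous shells of equal area, so a particle
population loaded uniformly in area is spread evenly across processes, and
each particle belongs to the process that owns the shell containing it.
"""
import bisect
import math


def radial_boundaries(r_in, r_out, n_domains):
    """Return n_domains + 1 radii bounding equal-area annular shells."""
    if not (0.0 <= r_in < r_out) or n_domains < 1:
        raise ValueError("need 0 <= r_in < r_out and n_domains >= 1")
    span = r_out**2 - r_in**2           # annulus area is pi * span
    inner = [math.sqrt(r_in**2 + k * span / n_domains)
             for k in range(1, n_domains)]
    return [r_in] + inner + [r_out]     # pin endpoints to avoid round-off


def owner(r, bounds):
    """Index of the shell (and hence process) that owns a particle at radius r."""
    if not (bounds[0] <= r <= bounds[-1]):
        raise ValueError("radius outside the simulated domain")
    # bisect_right: a radius on an interior boundary goes to the outer shell
    k = bisect.bisect_right(bounds, r) - 1
    return min(k, len(bounds) - 2)      # r == r_out belongs to the last shell


def shell_area(bounds, k):
    """Cross-sectional area of shell k."""
    return math.pi * (bounds[k + 1]**2 - bounds[k]**2)
```

For example, `radial_boundaries(0.1, 1.0, 4)` returns five radii bounding four shells of equal area. Because area grows with radius, equal-area shells become thinner toward the edge, which balances particle counts only if particles are loaded uniformly in area.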

Tang commented that the impressive muscle of leadership computing facility machines is related to Moore’s Law, the empirical observation that computing power doubles roughly every 18 months, a trend projected to continue until about 2015. “Since every simulation is inherently imperfect, you will want to continue to increase the physics fidelity of any advanced code, be it in physics, chemistry, climate modeling, biology, or any other application domain. So you will always have—and Mother Nature demands that you will always have—a voracious appetite for more and more powerful computational capabilities,” Tang said. “You have to come up with more and more creative algorithms that allow you to tap the compute power of the increasingly complex modern systems.”
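Taking the 18-month doubling time quoted above at face value, the growth it implies compounds quickly. Writing P_0 for computing power at some starting time (a symbol introduced here for illustration):

$$
P(t) = P_0 \, 2^{t / (1.5\ \text{yr})}, \qquad
\frac{P(10\ \text{yr})}{P_0} = 2^{10/1.5} = 2^{20/3} \approx 102
$$

That is roughly a hundredfold increase in computing power per decade.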

If the team can continue to make use of such systems successfully, the resulting large-scale simulations will generate enough data to clog the flow of information into and out of processing cores, Tang pointed out. Accordingly, his team includes ORNL computational scientist Scott Klasky, the lead developer of the Adaptable Input/Output System, or ADIOS. This middleware speeds the movement of data into and out of the computer and to the I/O system, so HPC systems spend more time computing scientific answers and less time transferring data.

As ITER moves to the operational phase, the ability to use leadership computing resources to promptly harvest physics insights from such applications will be essential in guiding the experimental campaign.
