An Update on Research Directed at Revolutionizing Tornado Prediction

Tornadoes, the most violent of storms, can take lives and destroy neighborhoods in seconds, and every state in America is at some risk from this hazard. With the southern states in the midst of peak tornado season (March through May) and the northern states soon to enter theirs (late spring through early summer), an update on the tornado modeling and simulation research of Amy McGovern of the University of Oklahoma (OU) is especially timely.

The goal of McGovern’s research project is to revolutionize the ability to anticipate tornadoes by explaining why some storms generate tornadoes and others don’t, and by developing advanced techniques for analyzing data to discover how the twisters move in both space and time.

“We hope that with a more accurate prediction and improved lead time on warnings, more people will heed the warnings, and thus loss of life and property will be reduced,” she says.

McGovern’s research is funded by the National Science Foundation’s (NSF) Faculty Early Career Development Program, a prestigious award that supports junior faculty “who exemplify the role of teacher–scholars through outstanding research, excellent education and the integration of education and research within the context of the mission of their organizations.”

In May 2011, the National Institute for Computational Sciences (NICS) reported on McGovern’s research. The article was published in the wake of a severe weather rampage consisting of almost 200 tornadoes that ravaged the southern United States, killing more than 315 people and causing billions of dollars in damage.

To provide the project with insight into the types of atmospheric data that are most important in storm prediction, McGovern has formed collaborative relationships with researchers from OU’s School of Meteorology, the National Severe Storms Laboratory and the National Center for Atmospheric Research.

A Better Numerical Model

Getting useful, reliable data from simulations requires a stable model, and McGovern says that finding and implementing the model called CM1 as a replacement for the one they were using (known as ARPS) has been the team’s biggest success since NICS last reported on the research project.

She and OU meteorology graduate student Brittany Dahl say that CM1 is very reliable at the high resolutions required for tornadic simulations. ARPS was designed for coarser domains. Switching to CM1 has enabled them to focus on the science more intensely, rather than the workflow.

Simulating Storms

The storms that McGovern and her team create are not re-creations of real events; they are based on conditions typically seen in environments favorable to tornado development.

Dahl explains, “We start by using a bubble of warm air to perturb the atmosphere in the simulation and set the storm-building process in motion. From there, the equations and parameters we’ve set in the model guide the storm’s development.”

The team is trying to fashion storms that are more realistic by adding friction — the influence of such things as grass and other elements on the ground — as a variable, but doing so isn’t easy. Friction is very difficult to represent in the model as a storm begins to spin in pronounced fashion.

Improving Warn-on-Forecast

Work on Dahl’s master’s thesis will be the focus of the research project between now and December, and the outcome is expected to be a significant finding in storm prediction.

Dahl explains that the National Weather Service has the goal of implementing an approach to early warning called Warn-on-Forecast, which involves using models to create forecasts on the computer. She says running the models can provide sufficient assurance as to whether a storm is going to produce a tornado so that people can be informed 30 minutes to an hour ahead of time. However, she points out that the simulations take a lot of computing power.

“Warn-on-Forecast can be implemented sooner if the forecast models are able to run at a lower resolution,” she says. “I think the current goal is half a kilometer horizontal resolution.”

Dahl’s thesis will explore whether patterns that indicate a tornado is forming or will form in the future can be found at the coarser (and thus less-computationally intensive) resolution. The investigation will also require the creation of high-resolution images for comparative analysis.

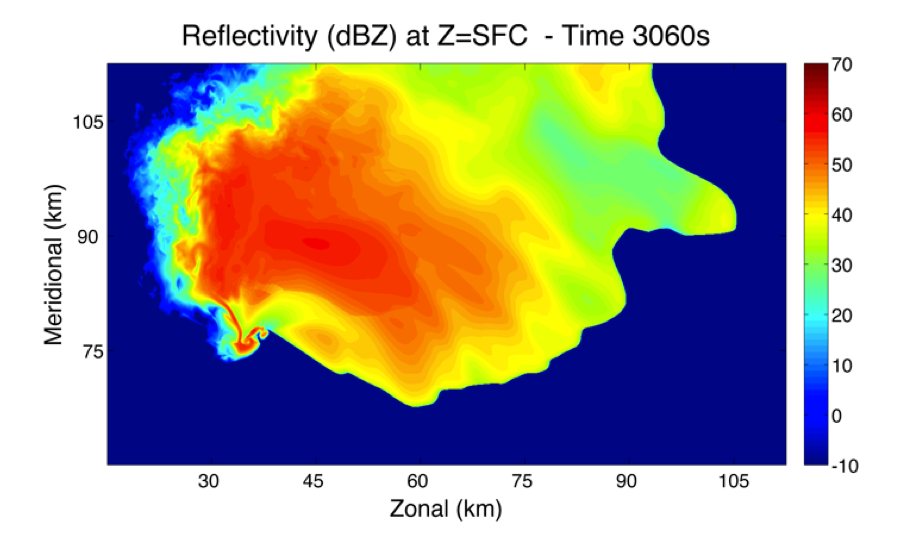

“The idea is to look at 50-meter, high-resolution versions of the storm to observe the strength and longevity of the tornadoes that they produce, and then compare that with our run of the same storm at 500-meter resolution and determine if any patterns can be picked up at the coarser resolution that are connected to what we see at the fine resolution,” Dahl says.

“She wants to hone in on what she’s looking for that actually generates the tornado, and the only way you can confirm that is to make the high-resolution simulations,” McGovern explains. “Those are not feasible to do all across the U.S. right now on a Warn-on-Forecast basis. We are running on 112 by 112 kilometer domain; now scale that up to the U.S. and ask it to run in real time. We’re not quite there yet.”
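The gap McGovern describes can be made concrete with a little arithmetic. The sketch below counts horizontal grid columns for the 112-by-112-kilometer domain at the two grid spacings Dahl mentions. It assumes a uniform horizontal grid, and the function name `horizontal_columns` is hypothetical. Vertical levels and time-step length are left out, so the real difference in computing cost is likely larger than this count suggests.

```python
def horizontal_columns(domain_km: float, spacing_m: float) -> int:
    """Number of horizontal grid columns in a square domain.

    Assumes a uniform grid whose spacing divides the domain evenly.
    """
    points_per_side = round(domain_km * 1000 / spacing_m)
    return points_per_side * points_per_side


DOMAIN_KM = 112  # domain size quoted in the article

coarse = horizontal_columns(DOMAIN_KM, 500)  # 500-meter run
fine = horizontal_columns(DOMAIN_KM, 50)     # 50-meter run

print(f"500 m: {coarse:,} columns")
print(f"50 m:  {fine:,} columns")
print(f"ratio: {fine // coarse}x")
```

Under these assumptions, the 50-meter run carries 100 times as many horizontal columns as the 500-meter run over the same domain.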

Dahl says she may find that 500-meter resolution is not sufficient to reveal whether a tornado is going to happen.

“Even if that turns out to be the case, it’s still a finding,” McGovern says. “It would tell them they couldn’t do this and need to move on to 100 meters or other resolution.”

Kraken and Nautilus

To run each of her simulations, Dahl is using 6,000 processor cores and 10 compute service units (hours) on the Kraken supercomputer. Trying to do the simulation on a machine with 24 to 36 computing cores, for example, would probably take a week, McGovern says.

“In one recent week I ran three simulations on Kraken and could have done more, too,” Dahl says. “It’s just that I pace myself so I can keep up with the post-processing.”

Kraken and the Nautilus supercomputer provide the disk space that the project simulations require. One simulation can produce more than a terabyte of data.

“What’s nice about having Kraken and Nautilus connected together is that it makes it a lot easier to transfer the data over to where you can use Nautilus to analyze it,” Dahl says.

Data Mining

“The biggest thing that Nautilus does for us right now is process the data so that we can mine it, because we’re trying to cut these terabytes of data down to something that’s usable metadata,” McGovern says. “I am able to reduce one week of computations down to 30 minutes on Nautilus, and post-processing time is reduced from several weeks to several hours.”

Dahl uses Nautilus to visualize the data so that she can watch a time series of what happens for the three hours that a simulation runs and use what she sees as a guide in decision-making. She could decide to change some of the initial conditions for the simulations or choose other areas to investigate. She says she is working on developing a data-mining approach to use later in the project.

The team’s data mining will involve the use of a decision-tree technique that they have developed, McGovern says. It can pose a series of spatial-temporal questions about the relationship of one object in three-space to another object in three-space, and inquire how that relationship changes over time.
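As a rough illustration of what a spatial-temporal question might look like, the sketch below tracks the distance between two objects in three-space across successive time steps and asks whether they are moving closer together. This is not the team's decision-tree technique, which the article does not detail. The centroid values, the object names and the helper functions `separations` and `is_approaching` are all hypothetical.

```python
import math

# Hypothetical centroids (x, y, z in kilometers) of two storm objects
# at successive output times; the values are made up for illustration.
updraft_track = [(10.0, 10.0, 5.0), (11.0, 10.5, 5.0), (12.0, 11.0, 5.5)]
downdraft_track = [(16.0, 10.0, 2.0), (15.0, 10.5, 2.0), (14.0, 11.0, 2.5)]


def separations(track_a, track_b):
    """Distance between two object centroids at each time step."""
    return [math.dist(a, b) for a, b in zip(track_a, track_b)]


def is_approaching(distances):
    """True if the separation shrinks at every step."""
    return all(later < earlier
               for earlier, later in zip(distances, distances[1:]))


dist_km = separations(updraft_track, downdraft_track)
print([round(d, 2) for d in dist_km])
print("approaching:", is_approaching(dist_km))
```

A yes-or-no answer such as the one returned by `is_approaching` is the kind of test a decision tree could branch on, although the questions the team actually poses may differ.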

“We can ask questions about fields within the object, so if you have an updraft region, which is a three-dimensional object, you can ask how the updraft is changing vertically or horizontally so you can look at the gradient and see how strongly it’s increasing,” McGovern says.
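The gradient McGovern mentions can be approximated with finite differences. The sketch below is a minimal example, assuming evenly spaced samples of vertical velocity through an updraft; the sample values, the 500-meter vertical spacing and the function name `central_gradient` are hypothetical and are not taken from the project's data or code.

```python
def central_gradient(values, spacing):
    """Finite-difference gradient of evenly spaced samples.

    Uses central differences in the interior and one-sided
    differences at the two ends.
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples")
    grad = []
    for i in range(n):
        lo = max(i - 1, 0)
        hi = min(i + 1, n - 1)
        grad.append((values[hi] - values[lo]) / ((hi - lo) * spacing))
    return grad


# Hypothetical vertical velocity (m/s) sampled every 500 m of height.
updraft_w = [2.0, 6.0, 14.0, 20.0, 22.0]
dz_m = 500.0

dw_dz = central_gradient(updraft_w, dz_m)
strongest = max(range(len(dw_dz)), key=lambda i: dw_dz[i])
print(dw_dz)
print("strongest increase at level", strongest)
```

The same function applies along a horizontal axis if the samples are taken across the updraft instead of up through it.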

She explains that collaboration with meteorologists is helping to ensure that data is reduced to the most relevant definitions, such as updraft, downdraft, type of downdraft, rain region, hail core, regions of high reflectivity, regions of buoyancy and others.

The interdisciplinary combination of computer science and meteorology is providing the synergy needed to achieve the project goals of accurately predicting tornadoes and improving warning lead time.